Augmenting heavy and power-hungry data collection equipment with lighter, smaller wireless sensor network nodes leads to faster, larger deployments. Arrays comprising dozens of wireless sensor nodes are now possible, allowing scientific studies that aren’t feasible with traditional instrumentation. Designing sensor networks to support volcanic studies requires addressing the high data rates and high data fidelity these studies demand. The authors’ sensor-network application for volcanic data collection relies on triggered event detection and reliable data retrieval to meet bandwidth and data-quality demands.

Introduction

Today’s typical volcanic data-collection station consists of a group of bulky, heavy, power-hungry components that are difficult to move and require car batteries for power. Remote deployments often require vehicle or helicopter assistance for equipment installation and maintenance. Local storage is also a limiting factor: stations typically log data to a Compact Flash card or hard drive, which researchers must periodically retrieve, requiring them to regularly return to each station.

The geophysics community has well-established tools and techniques it uses to process signals extracted by volcanic data-collection networks. These analytical methods require that our wireless sensor networks provide data of extremely high fidelity; a single missed or corrupted sample can invalidate an entire record. Small differences in sampling rates between two nodes can also frustrate analysis, so samples must be accurately time stamped to allow comparisons between nodes and between networks.

An important feature of volcanic signals is that much of the data analysis focuses on discrete events, such as eruptions, earthquakes, or tremor activity. Although volcanoes differ significantly in the nature of their activity, during the deployment, many interesting signals spanned less than 60 seconds and occurred several dozen times per day. This let us design the network to capture time-limited events, rather than continuous signals.

Sensor-Network Application Design

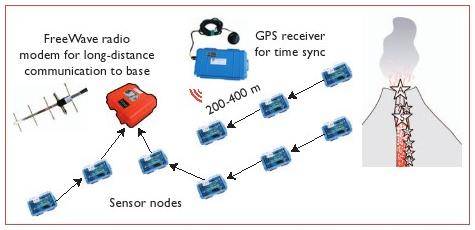

Given wireless sensor network nodes’ current capabilities, we set out to design a data-collection network that would meet the scientific requirements we outlined in the previous section. Before describing our design in detail, let’s take a high-level view of our sensor node hardware and give an overview of the network’s operation. Figure 1 shows our sensor network architecture.

Figure 1. The volcano monitoring sensor-network architecture. The network consists of 16 sensor nodes, each with a microphone and seismometer, collecting seismic and acoustic data on volcanic activity. Nodes relay data via a multihop network to a gateway node connected to a long-distance FreeWave modem, providing radio connectivity with a laptop at the observatory. A GPS receiver is used along with a multihop time-synchronization protocol to establish a network-wide timebase.

Network Hardware

The sensor network comprised 16 stations equipped with seismic and acoustic sensors. Each station consisted of a Moteiv TMote Sky wireless sensor network node, an 8-dBi 2.4-GHz external omnidirectional antenna, a seismometer, a microphone, and a custom hardware interface board. Fourteen of the nodes were fitted with a Geospace Industrial GS-11 geophone, a single-axis seismometer with a corner frequency of 4.5 Hz, oriented vertically. The two remaining nodes were fitted with triaxial Geospace Industries GS-1 seismometers with corner frequencies of 1 Hz, yielding separate signals in each of the three axes.

The TMote Sky is a descendant of the University of California, Berkeley’s Mica “mote” sensor node. It features a Texas Instruments MSP430 microcontroller, 48 Kbytes of program memory, 10 Kbytes of static RAM, 1 Mbyte of external flash memory, and a 2.4-GHz Chipcon CC2420 IEEE 802.15.4 radio. The TMote Sky was designed to run TinyOS [3], and all software development used this environment. We chose the TMote Sky because the MSP430 microprocessor provides several configurable ports that easily support external devices, and the large amount of flash memory was useful for buffering collected data, as we describe later.

We built a custom hardware board to integrate the TMote Sky with the seismoacoustic sensors. The board features up to four Analog Devices AD7710 analog-to-digital converters (ADCs), providing resolution of up to 24 bits per channel.

The MSP430 microcontroller provides on-board ADCs, but they’re unsuitable for our application. First, they provide only 16 bits of resolution, whereas we required at least 20 bits. Second, seismoacoustic signals require an aggressive filter centered around 50 Hz. Because implementing such a filter using analog components isn’t feasible, it’s usually approximated digitally, which requires several factors of oversampling. To perform this filtering, the AD7710 samples at more than 30 kHz, while presenting a programmable output word rate of 100 Hz. The high sample rate and computation that digital filtering requires are best delegated to a specialized device.

A pair of alkaline D cell batteries powered each sensor node; our network’s remote location made it important to choose batteries that maximized node lifetime while keeping cost and weight low. D cells provided the best combination of low cost and high capacity, and they can power a node for more than a week. Roughly 75 percent of the power each node draws is consumed by the sensor interface board, primarily due to the ADCs’ high power consumption.

The network was monitored and controlled by a laptop base station, located at a makeshift volcano observatory roughly 4 km from the sensor network itself. FreeWave radio modems using 9-dBi directional Yagi antennae established a long-distance radio link between the sensor network and the observatory.

Typical Network Operation

Each node samples two or four channels of seismoacoustic data at 100 Hz, storing the data in local flash memory. Nodes also transmit periodic status messages and perform time synchronization, as described later. When a node detects an interesting event, it routes a message to the base station laptop. If enough nodes report an event within a short time interval, the laptop initiates data collection, which proceeds in a round-robin fashion. The laptop downloads between 30 and 60 seconds of data from each node using a reliable data-collection protocol, ensuring that the system retrieves all buffered data from the event. When data collection completes, nodes return to sampling and storing sensor data.

Sensor-Network Device Enclosures and Physical Setup

A single sensor network node, interface board, and battery holder were all housed inside a small weatherproof and watertight Pelican case, as Figure 2 shows.

Figure 2. A two-component station. The blue Pelican case contains the wireless sensor node and hardware interface board. The external antenna is mounted on the PVC pole to reduce ground effects. A microphone is taped to the PVC pole, and a single seismometer is buried nearby.

We installed environmental connectors through the case, letting cables be attached to external sensors and antennae without opening the case and disturbing the equipment inside. For working in wet and gritty conditions, these external connectors became a tremendous asset. Installing a station involved covering the Pelican case with rocks to anchor it and shield the contents from direct sunlight.

The antennae were elevated on 1.5-meter lengths of PVC piping to minimize ground effects, which can reduce radio range. We buried the seismometers nearby, but far enough away that they remained undisturbed by any wind-induced shaking of the antenna pole. Typically, we mounted the microphone on the antenna pole and shielded it from the wind and elements with plastic tape. Installation took several minutes per node, and the equipment was sufficiently light and small that an individual could carry six stations in a large pack. The PVC poles were light but bulky and proved the most awkward part of each station to cart around.

Network Location and Topology

We installed our stations in a roughly linear configuration that radiated away from the volcano’s vent and produced an aperture of more than three kilometers. We attempted to position the stations as far apart as the radios on each node would allow. Although our antennae could maintain radio links of more than 400 meters, the geography at the deployment site occasionally required installing additional stations to maintain radio connectivity. Other times, we deployed a node expecting it to communicate with an immediate neighbor but later noticed that the node was bypassing its closest companion in favor of a node closer to the base station.

Most nodes communicated with the base station over three or fewer hops, but a few were moving data over as many as six. In addition to the sensor nodes, three FreeWave radio modems provided a long-distance, reliable radio link between the sensor network and the observatory laptop. Each FreeWave modem required a car battery for power, recharged by solar panels. A small number of Crossbow MicaZ sensor network nodes served supporting roles. One interfaced between the network and the FreeWave modem, and another was attached to a GPS receiver to provide a global timebase.

Design Issues in Deploying a WSN on an Active Volcano

Overcoming High Data Rates: Event Detection and Buffering

When designing high-data-rate sensing applications, we must remember an important limitation of current sensor-network nodes: low radio bandwidth. IEEE 802.15.4 radios, such as the Chipcon CC2420, have raw data rates of roughly 30 Kbytes per second. However, overheads caused by packet framing, medium access control (MAC), and multihop routing reduce the achievable data rate to less than 10 Kbytes per second, even in a single-hop network. Consequently, nodes can acquire data faster than they can transmit it. Simply logging data to local storage for later retrieval is also infeasible for these applications.

The TMote Sky’s flash memory fills in roughly 20 minutes when recording two channels of data at 100 Hz. Fortunately, many interesting volcanic events will fit in this buffer. For a typical earthquake or explosion at Reventador, 60 seconds of data from each node is adequate.
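The buffering and bandwidth figures above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the flash size, sampling rate, and node count come from the text, but the storage cost of 4 bytes per sample is an assumption (the text does not say how the 24-bit samples are packed), and per-block metadata is ignored.

```python
FLASH_BYTES = 1 * 1024 * 1024  # 1 Mbyte of external flash (from the text)
SAMPLE_RATE_HZ = 100           # per-channel sampling rate (from the text)
BYTES_PER_SAMPLE = 4           # ASSUMPTION: each 24-bit sample occupies 4 bytes


def node_bytes_per_second(channels):
    """Raw data rate produced by one node."""
    return channels * SAMPLE_RATE_HZ * BYTES_PER_SAMPLE


def buffer_minutes(channels):
    """Minutes of data the flash can hold before it wraps around."""
    return FLASH_BYTES / node_bytes_per_second(channels) / 60.0


def event_bytes(channels, seconds):
    """Bytes one node must upload for an event of the given duration."""
    return node_bytes_per_second(channels) * seconds


def network_bytes_per_second(nodes, channels):
    """Aggregate rate if every node streamed continuously."""
    return nodes * node_bytes_per_second(channels)


if __name__ == "__main__":
    print(f"two-channel buffer: {buffer_minutes(2):.1f} min")
    print(f"60 s event, two channels: {event_bytes(2, 60)} bytes")
    print(f"16 nodes streaming: {network_bytes_per_second(16, 2)} bytes/s")
```

Under the 4-byte assumption, a two-channel node fills its flash in about 22 minutes, in line with the roughly 20 minutes quoted above, and 16 such nodes streaming continuously would generate more data per second than the sub-10-Kbyte achievable rate of a single-hop link.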

Each sensor node stores sampled data in its local flash memory, which we treat as a circular buffer. Each block of data is time stamped using the local node time, which is later mapped to a global network time. Each node runs an event detector on locally sampled data.
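A circular buffer of time-stamped blocks can be sketched as follows. This is a minimal in-memory illustration, not the deployed TinyOS code; the class name, method names, and the use of a sequence number to detect overwritten blocks are all assumptions made for the example.

```python
class CircularBlockBuffer:
    """Fixed-capacity ring of (sequence number, local time, payload) blocks.

    Hypothetical sketch: a real node would write to flash pages rather
    than a Python list.
    """

    def __init__(self, capacity_blocks):
        if capacity_blocks <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity_blocks
        self.slots = [None] * capacity_blocks
        self.next_seq = 0

    def append(self, local_time, payload):
        """Store a block, overwriting the oldest one once the ring is full."""
        seq = self.next_seq
        self.slots[seq % self.capacity] = (seq, local_time, payload)
        self.next_seq += 1
        return seq

    def get(self, seq):
        """Return the block with this sequence number, or None if it was
        never written or has already been overwritten."""
        entry = self.slots[seq % self.capacity]
        if entry is None or entry[0] != seq:
            return None
        return entry

    def oldest_seq(self):
        """Sequence number of the oldest block still held."""
        return max(0, self.next_seq - self.capacity)
```

Storing the sequence number alongside each block lets a reader tell a stale slot from the block it asked for, which matters when a download request arrives after the buffer has wrapped.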

Good event-detection algorithms produce high detection rates while maintaining small false-positive rates. The detection algorithm’s sensitivity links these two metrics: a more sensitive detector correctly identifies more events at the expense of producing more false positives. We implemented a short-term average/long-term average threshold detector, which computes two exponentially weighted moving averages (EWMAs) with different gain constants. When the ratio between the short-term average and the long-term average exceeds a fixed threshold, the detector fires. The detector threshold lets nodes distinguish between low-amplitude signals, perhaps from distant earthquakes, and high-amplitude signals from nearby volcanic activity. When the event detector on a node fires, it routes a small message to the base-station laptop. If enough nodes report events within a certain time window, the laptop initiates data collection from the entire network (including nodes that didn’t report the event).
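The short-term/long-term average detector described above can be sketched in a few lines. The gain constants and threshold below are illustrative assumptions, not the values used in the deployment, and the sketch assumes the input has already had its DC offset removed so that the absolute value tracks signal amplitude.

```python
class StaLtaDetector:
    """Threshold detector on the ratio of two EWMAs of signal amplitude."""

    def __init__(self, short_gain=0.2, long_gain=0.01, threshold=3.0):
        # ASSUMPTION: these constants are placeholders for illustration.
        self.short_gain = short_gain
        self.long_gain = long_gain
        self.threshold = threshold
        self.short_avg = None
        self.long_avg = None

    def update(self, sample):
        """Feed one zero-mean sample; return True if the detector fires."""
        amplitude = abs(sample)
        if self.short_avg is None:
            # Seed both averages with the first sample.
            self.short_avg = amplitude
            self.long_avg = amplitude
            return False
        # EWMA update: avg += gain * (new value - avg)
        self.short_avg += self.short_gain * (amplitude - self.short_avg)
        self.long_avg += self.long_gain * (amplitude - self.long_avg)
        if self.long_avg <= 0:
            return False
        return self.short_avg / self.long_avg > self.threshold
```

The larger gain makes the short-term average react within a few samples, while the smaller gain keeps the long-term average close to the background level, so a sudden rise in amplitude pushes the ratio over the threshold. Raising the threshold trades missed low-amplitude events for fewer false positives.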

This global filtering prevents spurious event detections from triggering a data-collection cycle. Fetching 60 seconds of data from all 16 nodes in the network takes roughly one hour. Because nodes can only buffer 20 minutes of eruption data locally, each node pauses sampling and reporting events until it has uploaded its data. Given that the latency associated with data collection prevents our network from capturing all events, optimizing the data-collection process is a focus of future work.
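The global filtering step at the base station amounts to counting distinct reporting nodes within a sliding time window. The sketch below is one way to express that idea; the node count, window length, and class name are assumptions for illustration, and it assumes reports arrive in time order.

```python
from collections import deque


class CoincidenceTrigger:
    """Fire when enough distinct nodes report within a time window."""

    def __init__(self, min_nodes=3, window_s=10.0):
        # ASSUMPTION: both parameters are illustrative placeholders.
        self.min_nodes = min_nodes
        self.window_s = window_s
        self.reports = deque()  # (time_s, node_id), oldest first

    def report(self, node_id, time_s):
        """Record one node's detection; return True to start collection."""
        self.reports.append((time_s, node_id))
        # Drop reports that have fallen out of the window.
        while self.reports and time_s - self.reports[0][0] > self.window_s:
            self.reports.popleft()
        distinct_nodes = {node for _, node in self.reports}
        if len(distinct_nodes) >= self.min_nodes:
            self.reports.clear()  # start fresh after triggering
            return True
        return False
```

Counting distinct node IDs rather than raw reports means a single noisy node that fires repeatedly cannot trigger a collection cycle on its own.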

Reliable Data Transmission and Time Synchronization

Extracting high-fidelity data from a wireless sensor network is challenging for two primary reasons. First, the radio links are lossy and frequently asymmetrical. Second, the low-cost crystal oscillators on these nodes have low tolerances, causing clock rates to vary across the network. Much prior research has focused on addressing these challenges.

We developed a reliable data-collection protocol, called Fetch, to retrieve buffered data from each node over a multihop network. Samples are buffered locally in blocks of 256 bytes, then tagged with sequence numbers and time stamps. During transmission, a sensor node fragments each requested block into several chunks, each of which is sent in a single radio message. The base-station laptop retrieves a block by flooding a request to the network using Drip, a variant of the TinyOS Trickle [6] data-dissemination protocol. The request contains the target node ID, the block sequence number, and a bitmap identifying missing chunks in the block. The target node replies by sending the requested chunks over a multihop path to the base station.
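The chunk-and-bitmap exchange can be illustrated with a small simulation. This is a sketch of the idea rather than the Fetch implementation: the 256-byte block size comes from the text, but the 32-byte chunk payload, the function names, and the `link` callback that models packet loss are all assumptions.

```python
BLOCK_BYTES = 256  # block size (from the text)
CHUNK_BYTES = 32   # ASSUMPTION: payload bytes carried by one radio message


def fragment(block, chunk_bytes=CHUNK_BYTES):
    """Split a block into chunks, one per radio message."""
    return [block[i:i + chunk_bytes] for i in range(0, len(block), chunk_bytes)]


def missing_bitmap(received, n_chunks):
    """Bit i is set when chunk i has not been received yet."""
    bitmap = 0
    for i in range(n_chunks):
        if i not in received:
            bitmap |= 1 << i
    return bitmap


def fetch_block(block, link, max_rounds=50):
    """Simulate the base station re-requesting chunks until none are missing.

    link(i) is a hypothetical loss model: it returns True when chunk i
    survives the trip to the base station.
    """
    chunks = fragment(block)
    received = {}
    for _ in range(max_rounds):
        bitmap = missing_bitmap(received, len(chunks))  # sent in the request
        for i, chunk in enumerate(chunks):
            if bitmap & (1 << i) and link(i):            # node's reply
                received[i] = chunk
        if len(received) == len(chunks):
            return b"".join(received[i] for i in range(len(chunks)))
    return None  # gave up after max_rounds requests
```

Because each request names only the chunks still missing, a retry costs bandwidth in proportion to what was lost rather than to the size of the whole block.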

Scientific volcano studies require sampled data to be accurately time stamped; in this case, a global clock accuracy of ten milliseconds was sufficient. We chose the Flooding Time Synchronization Protocol (FTSP) to establish a global clock across our network. FTSP’s published accuracy is very high, and the TinyOS code was straightforward to integrate into our application. One of the nodes used a Garmin GPS receiver to map the FTSP global time to GMT. Unfortunately, FTSP occasionally exhibited unexpected behavior, in which nodes would report inaccurate global times, preventing some data from being correctly time stamped. We’re currently developing techniques to correct our data sets’ time stamps based on the large number of status messages logged from each node, which provide a mapping from the local clock to the FTSP global time.
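One simple way to build a local-to-global clock mapping from logged status messages is a least-squares line fit, which absorbs both a constant offset and a constant rate difference between the two clocks. The text does not describe the correction technique actually under development, so the sketch below is only an illustration of the general idea; the function names are hypothetical, and a practical version would also need to reject the inaccurate global times mentioned above before fitting.

```python
def fit_clock(pairs):
    """Least-squares fit of global = rate * local + offset.

    pairs is a list of (local_time, global_time) tuples taken from
    status messages. Returns (rate, offset).
    """
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two status messages")
    mean_local = sum(l for l, _ in pairs) / n
    mean_global = sum(g for _, g in pairs) / n
    var_local = sum((l - mean_local) ** 2 for l, _ in pairs)
    if var_local == 0:
        raise ValueError("local times must not all be equal")
    cov = sum((l - mean_local) * (g - mean_global) for l, g in pairs)
    rate = cov / var_local
    offset = mean_global - rate * mean_local
    return rate, offset


def to_global(local_time, rate, offset):
    """Map a locally time-stamped sample onto the global timebase."""
    return rate * local_time + offset
```

With a fit per node, every buffered block's local time stamp can be converted after the fact, which is what allows comparison of signals between nodes.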

Command and Control

A feature missing from most traditional volcanic data-acquisition equipment is real-time network control and monitoring. The long-distance radio link between the observatory and the sensor network lets our laptop monitor and control the network’s activity. We developed a Java-based GUI for monitoring the network’s behavior and manually setting parameters, such as sampling rates and event-detection thresholds. In addition, the GUI was responsible for controlling data collection following a triggered event, moving significant complexity out of the sensor network. The laptop logged all packets received from the sensor network, facilitating later analysis of the network’s operation.

The GUI also displayed a table summarizing network state, based on the periodic status messages that each node transmitted. Each table entry included the node ID; local and global time stamps; various status flags; the amount of locally stored data; depth, parent, and radio link quality in the routing tree; and the node’s temperature and battery voltage. This functionality greatly aided sensor deployment by letting a team member rapidly determine whether a new node had joined the network as well as the quality of its radio connectivity.

Early Results

The sensor network was deployed at Volcán Reventador for more than three weeks, during which time it collected seismoacoustic signals from several hundred events. Some early observations follow. During the 19-day deployment, we retrieved data from the network 61 percent of the time. Many short outages occurred because, due to the volcano’s remote location, powering the logging laptop around the clock was often impossible. By far the longest continuous network outage was due to a software component failure, which took the system offline for three days until researchers returned to the deployment site to reprogram nodes manually. Finally, the event-triggered model worked well. During the deployment, the network detected 230 eruptions and other volcanic events, and logged nearly 107 Mbytes of data. Figure 3 shows an example of a typical earthquake our network recorded.

Figure 3. An event captured by our network. The event shown was a volcano-tectonic (VT) event and had no interesting acoustic component. The data shown have undergone several rounds of postprocessing, including timing rectification.

Conclusion

By examining the data downloaded from the network, we verified that the local and global event detectors were functioning properly. As we described, we disabled sampling during data collection, implying that the system was unable to record two back-to-back events. In some instances, this meant that a small seismic event would trigger data collection, and we’d miss a large explosion shortly thereafter. We plan to revisit our approach to event detection and data collection to take this into account. Our deployment raises many exciting directions for future work. We plan to continue improving our sensor-network design and pursuing additional deployments at active volcanoes. This work will focus on improving event detection and prioritization, as well as optimizing the data collection path. We hope to deploy a much larger (100-node) array for several months, with continuous Internet connectivity via a satellite uplink.