Apache Hive is a data warehouse infrastructure that facilitates querying and managing large data sets residing in a distributed storage system. Hive is built on top of Hadoop and was originally developed at Facebook.

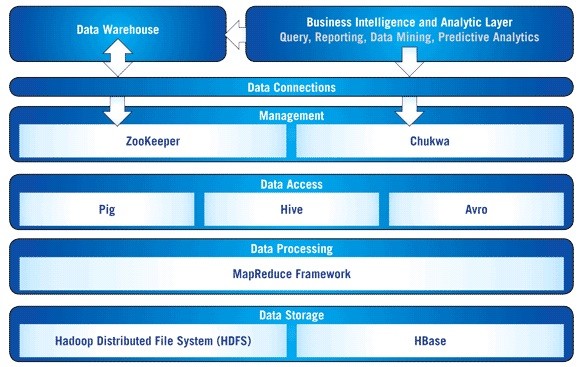

Hadoop includes the Hadoop Distributed File System (HDFS) and MapReduce. A large amount of data cannot be stored on a single node, so Hadoop uses a distributed file system called HDFS, which splits the data into many smaller parts and distributes each part redundantly across multiple nodes. MapReduce is a software framework for the analysis and transformation of very large data sets. Hadoop uses MapReduce for distributed computation.

Hive manages data stored in HDFS and provides a SQL-like query language, HiveQL (Hive Query Language), for querying that data. To the user, Hive looks very much like a traditional database with SQL access. Internally, a compiler translates HiveQL statements into MapReduce jobs, which are then submitted to the Hadoop framework for execution.
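To make the translation step a little more concrete, here is a minimal sketch that saves a HiveQL query to a file. The table name docs and the column word are hypothetical, chosen only for illustration. EXPLAIN asks Hive to print the plan it builds for the query, which is where the MapReduce stages become visible. The commented hive -f line assumes Hive is already installed as described in the steps further down.

```shell
# Write a small HiveQL script; the table "docs" and column "word" are made up.
cat > word_count.hql <<'EOF'
-- EXPLAIN prints the plan Hive builds for the query instead of running it.
EXPLAIN
SELECT word, COUNT(*) AS occurrences
FROM docs
GROUP BY word;
EOF

# Once Hive is installed and the table exists, run the script with:
#   hive -f word_count.hql
```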

Because Hive is based on Hadoop and MapReduce operations, there are several key differences between HiveQL and SQL. The first is that Hadoop is intended for long sequential scans, so you can expect Hive queries to have very high latency (many minutes). This means that Hive is not appropriate for applications that need very fast response times, as you would expect from a database such as DB2. The second is that Hive is read-based and therefore not appropriate for transaction processing, which typically involves a high percentage of write operations.

Welcome to the Apache Hive installation tutorial:

Step 1: Download and extract Hive from the official site

- Create a directory named hive and download the Hive tar file from the URL below: https://archive.apache.org/dist/hive/

- I am using the stable version hive-2.1.0.

- Right-click and extract, or run the command tar -xvf apache-hive-2.1.0-bin.tar.gz in a terminal inside the hive folder.
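If you prefer to do the whole of Step 1 from a terminal, the sketch below may help. It assumes wget is available and that the archive keeps each release in a per-version subdirectory (hive-2.1.0/ here), which is an assumption about the site layout rather than something stated above. The helper name fetch_hive is hypothetical.

```shell
# Version from the tutorial; the per-version subdirectory is an assumed layout.
HIVE_VERSION="2.1.0"
HIVE_TARBALL="apache-hive-${HIVE_VERSION}-bin.tar.gz"
HIVE_URL="https://archive.apache.org/dist/hive/hive-${HIVE_VERSION}/${HIVE_TARBALL}"

# Hypothetical helper: create the hive folder, download the tarball, unpack it.
fetch_hive() {
    mkdir -p hive && cd hive || return 1
    wget "$HIVE_URL" || return 1
    tar -xvf "$HIVE_TARBALL"
}

echo "Will download: $HIVE_URL"
```

Calling fetch_hive then performs the download and extraction. If the URL turns out to be wrong for your version, browse the archive page and adjust HIVE_URL.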

Step 2:

- Set the path in your .bashrc file. Open it with:

      gedit ~/.bashrc

- Add the following lines:

      # Set HIVE_HOME
      export HIVE_INSTALL=/home/soft/hive
      export PATH=$PATH:$HIVE_INSTALL/bin
      # HIVE conf ends

Reload the .bashrc file with: source ~/.bashrc
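As an alternative to editing the file by hand, the same settings can be appended from the command line. This is only a sketch: add_hive_to_rc is a hypothetical helper, and it skips the append if the file already mentions HIVE_INSTALL, so running it twice does not duplicate the lines.

```shell
# Hypothetical helper: append the Hive settings to a shell rc file once.
add_hive_to_rc() {
    rc_file="$1"
    # Skip if the settings are already there (a missing file counts as "not there").
    if grep -q 'HIVE_INSTALL=' "$rc_file" 2>/dev/null; then
        echo "Hive settings already present in $rc_file"
        return 0
    fi
    cat >> "$rc_file" <<'EOF'
# Set HIVE_HOME
export HIVE_INSTALL=/home/soft/hive
export PATH=$PATH:$HIVE_INSTALL/bin
# HIVE conf ends
EOF
    echo "Hive settings added to $rc_file"
}
```

Typical use would be add_hive_to_rc ~/.bashrc followed by source ~/.bashrc.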

Step 3:

- Hive is built on top of Hadoop, so for Hive to interact with Hadoop HDFS, it must know the path to the Hadoop installation directory.

- Open hive-config.sh (in Hive's bin directory) and add the line below:

      export HADOOP_HOME=/home/soft/hadoop
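Before moving on, it can be worth confirming that the path really points at a Hadoop installation. The sketch below uses a hypothetical helper, check_hadoop_home, which only tests that an executable bin/hadoop launcher exists under the given directory.

```shell
# Hypothetical check: does the given directory look like a Hadoop install?
check_hadoop_home() {
    if [ -x "$1/bin/hadoop" ]; then
        echo "OK: found $1/bin/hadoop"
    else
        echo "Missing: $1/bin/hadoop not found or not executable"
        return 1
    fi
}
```

For the path used in this tutorial, the call would be check_hadoop_home /home/soft/hadoop.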

Step 4:

- Create a directory within HDFS for Hive to use as its warehouse. Hive stores all of its data under this directory.

      hadoop fs -mkdir -p /user/hive/warehouse

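- Modify the folder permissions. The directory lives in HDFS, not on the local file system, so change them through Hadoop rather than with the local chmod:

      hadoop fs -chmod -R 775 /user/hive/warehouse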
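Step 4 can also be sketched as a small script. Because /user/hive/warehouse is an HDFS path, this sketch changes the permissions through hadoop fs -chmod rather than the local chmod. The helper name prepare_warehouse is hypothetical, and the final -ls -d simply prints the directory entry so you can check the result. It assumes the hadoop command is on your PATH and HDFS is running.

```shell
WAREHOUSE_DIR=/user/hive/warehouse

# Hypothetical helper: create the warehouse directory in HDFS, open up its
# permissions, then list the directory entry itself to confirm.
prepare_warehouse() {
    hadoop fs -mkdir -p "$WAREHOUSE_DIR" &&
    hadoop fs -chmod -R 775 "$WAREHOUSE_DIR" &&
    hadoop fs -ls -d "$WAREHOUSE_DIR"
}
```

Run prepare_warehouse once; the && chaining stops at the first command that fails.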

Step 5:

- Very important step: you can start Hive after the steps above, but with the default configuration the embedded metastore_db is created relative to the directory Hive is launched from, so you end up having to remove or rename metastore_db each time you start Hive. The metastore, however, is the central repository of Apache Hive metadata. It stores metadata for Hive tables (such as their schema and location) and partitions in a relational database, and it gives clients access to this information through the metastore service API.

- So we need the configuration below.

- Copy hive-default.xml.template (in Hive's conf directory) and rename the copy to hive-site.xml.

- Next, edit hive-site.xml.

- Give the full path for the metastore_db location, as below.

      <property>
        <name>javax.jdo.option.ConnectionURL</name>
        <value>jdbc:derby:;databaseName=/home/soft/hive/metastore_db;create=true</value>
        <description>
          JDBC connect string for a JDBC metastore.
          To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.
          For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.
        </description>
      </property>

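Then add the lines below at the start of hive-site.xml, just inside the opening configuration element:

    <property>
      <name>system:java.io.tmpdir</name>
      <value>/tmp/hive/java</value>
    </property>
    <property>
      <name>system:user.name</name>
      <value>${user.name}</value>
    </property>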
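A quick way to double-check the edits in this step is to search hive-site.xml for the three property names. The sketch below assumes the file uses the usual name elements for each property. Both helper names are hypothetical, and the check only confirms that the names are present, not that the values are what you intended.

```shell
# Hypothetical helper: succeed if the config file defines the named property.
has_property() {
    grep -qF "<name>$2</name>" "$1"
}

# Hypothetical helper: report on the three properties this step relies on.
check_hive_site() {
    missing=0
    for prop in javax.jdo.option.ConnectionURL system:java.io.tmpdir system:user.name; do
        if has_property "$1" "$prop"; then
            echo "found:   $prop"
        else
            echo "missing: $prop"
            missing=1
        fi
    done
    return "$missing"
}
```

With the paths used in this tutorial, the call would be check_hive_site /home/soft/hive/conf/hive-site.xml.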

Step 6:

- Before starting Hive, make sure your Hadoop NameNode and DataNode are running.

- Start Hive from a terminal:

      /home/soft/hive/bin/hive
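One way to confirm that the NameNode and DataNode are up is to look at the output of jps, the JDK tool that lists running Java processes. The sketch below wraps that in a hypothetical helper, hdfs_daemons_running, and assumes jps is on your PATH. It matches whole words, so a SecondaryNameNode is not mistaken for a NameNode.

```shell
# Hypothetical helper: succeed only if jps lists both HDFS daemons.
hdfs_daemons_running() {
    running="$(jps)"
    for daemon in NameNode DataNode; do
        # -w matches whole words, so SecondaryNameNode does not count as NameNode.
        if ! echo "$running" | grep -qw "$daemon"; then
            echo "$daemon is not running"
            return 1
        fi
    done
    echo "NameNode and DataNode are running"
}
```

A possible use is hdfs_daemons_running && hive. Hive 2.x releases may also ask for the metastore schema to be initialized once with schematool -dbType derby -initSchema before the first start. That is not part of the steps above, so treat it as something to try if Hive complains that the metastore is not initialized.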

Enjoy querying with Hive. Let me know if you face any issues.