You may want to read and understand Search Engines a bit (Introduction to search engines) before we deep dive into the Workings of Search Engines.

Almost daily we use search engines and get the desired result but have you ever wondered what actually happens behind the scene? How is the data stored? Where is the data stored? Does it use file system/database servers/cloud for storage? We will try to understand how a search engine works. Although it is a complex and tedious process, we are going to explain some of the important terminologies used in search engines and the basic process involved.

Data is stored in a number of files on the internet, such as ASCII files, binary files, or in databases. Search engines may vary on the way the data is stored. If the data is stored in the database the same can be queried easily to create search engines. For HTML files, graphics & PDFs search engine is an additional program.

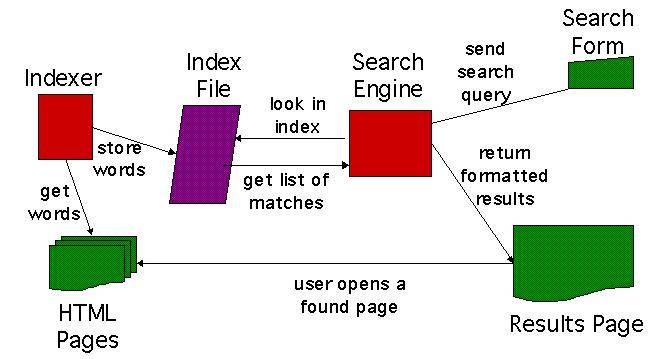

A search engine that does not have a given content, searches it elsewhere. This data comes from a program that crawls many pages and reads the contents. Such a program is called a ROBOT or a SPIDER. It crawls the URLs specified by the search engine and marks when a new one is found. Google.com differentiates the pages that have been crawled and those that have not. The search engine crawls the data indexes the pages. Whenever you search for a query, the pages that have been indexed are displayed on the results page. In the results, the search engine mostly displays the title of the related article and the excerpt.

When a user searches for something, the user is not actually searching the contents. Instead, he is searching an index of the content, the spider has found. In a database-driven site, the user performs a query on the content. An example for this could be, searching for a topic in the search box of Krazytech.

Suggested Read:

1. Simple data queries:

When data is stored in a database, simple queries are possible which is a call to the database by a middleware program based on user input. This query looks at a selected number of fields in the database. If it finds a match for the input the database returns the information to the middleware program, which generates a useful HTML display of the content that was found.

The database will be indexed for complex queries where it searches the index instead of the content. It also helps in noise word reduction, stemming and lookup tables for a content mapping.

2. Complex data queries:

Nielson’s recent summary on searching behavior suggests that if users are not successful with their first search they will not improve their search results on a second or third query (Nielsen). Since finding the correct piece of information quickly is important, complex queries are appropriate for keyword searching. They allow the user to ask that a series of conditions about their specific query be met.

3. Boolean searching:

Some search engines allow the user to specify conditions they want to be met in their search results. Boolean searching allows the user to specify groups of words, that should not appear and whether the search should be case-sensitive or not. AND or OR can be used to refine the search. These terms are logical expressions included in Boolean searching.

Most search engines allow some or the other form of Boolean searching. It includes syntax for case sensitive searching; but, some databases store their information in case-insensitive field types.

4. Pre-processed data:

In most search engines the data that the user searches is not the actual pages of information but a dataset of information about what’s contained in the pages. This is called an index. The original content is in a database and index is the second dataset.

Content indexing creates a document index that contains information about where each word is found on each page. The user performs a search on this index. The display results page translates the information found in the document index back into the information that is on the actual pages.

5. Indexing content:

Databases are sometimes given to improve performance. A search engine can be improved in terms of speed by using an index. An index is used to strip noise words out of the content.

6. Document index:

A document index is special content index. Most search engines make use of it to get responses for keywords. Information about the words in the documents allows the search engines relevancy calculation to return the best result.

7. Noise words:

To save space and time, search engines strip out words when you query the database. Some databases such as MySQL, have noise word rules built in. These general rules can be modified, additional rules can be placed on specific data sets to allow best results. The words that are stripped are called noise words. Noise words may be stripped out based on a specific list of words or length.

Search results display

- A number of records displayed:

Search results should give relevant information. They can be divided over a number of pages. Nielsen states users almost never look at the second page of search results (Nielsen). Some results may be lost by pagination. - Suggesting new spellings:

Sometimes spelling mistakes occur. To provide more chances of relevant search it suggests alternate spellings. Synonym lists present the user with an alternative word. A spellchecker can provide a list of alternate spellings of a word. - Hit highlighting:

In the search results page, the words you were searching on are sometimes highlighted in some way. Usually, the word is bold. This is hit highlighting. - Returning results for each successful query:

Often a user will search for multiple keywords. But each page should be returned only once. Instead of each successful result being displayed once, the search engine must know if a page has already been tagged as containing a result. If so, it should not be re-tagged.

CRAWLERS

Indexing a site:

When searching a website, chances are that you may not be actually searching the content, but rather a pre-formatted copy of the content. This increases the speed of each search- just as looking up a keyword in the index of a book is faster than reading the entire book. A database site may have an additional index, or it may search the content directly. An HTML site will need to enter all of its content into an index for the search engine to search.

With HTML sites the content is usually crawled at specific intervals of time. If new information is added, it will not appear in the search engine until the site has been re-indexed. Database sites that require an additional search engine usually have content stored in multiple tables.

This gives some approximation of a page’s importance or quality. PageRank extends this idea by not counting links from all pages equally, and by normalizing by the number of links on a page. PageRank is defined as follows:

We assume page A has pages T1…Tn which point to it (i.e., are citations). The parameter d is a damping factor which can be set between 0 and 1. We usually set d to 0.85. (There are more details about d in the next section). Also, C(A) is defined as the number of links going out of page A. The PageRank of page A is given as follows:

PR(A) = (1-d) + d (PR(T1)/C(T1) + … + PR(Tn)/C(Tn))

The below image shows the indexing status for a website by Google search engine. It shows the total number of web pages, images and the indexed pages, images. This detail can be found in the google webmasters tool (search console). You can also restrict certain web pages if you do not want search engines to crawl them. This can be done either with the help of robot.txt or by using meta tags.

Example: <meta name="robots" content="noindex" />

Major Data Structures used to store data by Search engines:

BigFiles:

Big Files are virtual files spanning multiple file systems and are addressable by 64-bit integers. The allocation among multiple file systems is handled automatically. The BigFiles package also handles allocation and deallocation of file descriptors, since the operating systems do not provide enough for our needs.

Repository:

The repository contains the full HTML of every web page. Each page is compressed using zlib. The choice of compression technique is a tradeoff between speed and compression ratio. We chose zlib’s speed over a significant improvement in compression offered bzip. In the repository, the documents are stored one after the other and are prefixed by docID, length, and URL.

Document Index:

The document index keeps information about each document. It is a fixed width ISAM (Index sequential access mode) index, ordered by docID. The information stored in each entry includes the current document status, a pointer into the repository, a document checksum, and various statistics. If the document has been crawled, it also contains a pointer to a variable width file called docinfo which contains its URL and title. Otherwise, the pointer points into the URLlist which contains just the URL.

Lexicon:

The lexicon has several different forms. The current lexicon contains 14 million words. It is implemented in two parts – a list of the words and a hash table of pointers.

Hit Lists:

A hit list corresponds to a list of occurrences of a particular word in a particular document including position, font, and capitalization information. Hit lists account for most of the space used in both the forward and the inverted indices. Because of this, it is important to represent them as efficiently as possible. Google considers several alternatives for encoding position, font, and capitalization simple encoding, a compact encoding, and Huffman coding.

Forward Index:

The forward index is actually already partially sorted. It is stored in a number of barrels. Each barrel holds a range of wordID’s. If a document contains words that fall into a particular barrel, the docID is recorded into the barrel, followed by a list of wordID’s with hit lists which correspond to those words. Instead of storing actual wordID’s, it stores each worded as a relative difference from the minimum worded that falls into the barrel the worded is in.

Inverted Index:

The inverted index consists of the same barrels as the forward index, except that they have been processed by the sorter. For every valid wordID, the lexicon contains a pointer into the barrel that wordID falls into. It points to a doclist of docID’s together with their corresponding hit lists. This doclist represents all the occurrences of that word in all documents.

Thank you for your information.

You are welcome Dhanalakshmi.

What is the conclusion for this topic?

Hi,

You can conclude by explaining how much we are dependent on search engines, the role it plays in our day today life and how search engine eases the work of people.

Comments are closed.