The Internet is one of the most widely used services in the world today. People rely on it for banking, entertainment, data storage, blogging, earning a living, and much more. Websites hold an enormous volume of data, and a search engine is essential for finding the relevant part of it. Many search engines exist, including Google, Microsoft Bing, Baidu, Yahoo, and Ask, to name a few. Today we will explore how the Google search engine works.

You may like to read Introduction to search engines before we begin with this article.

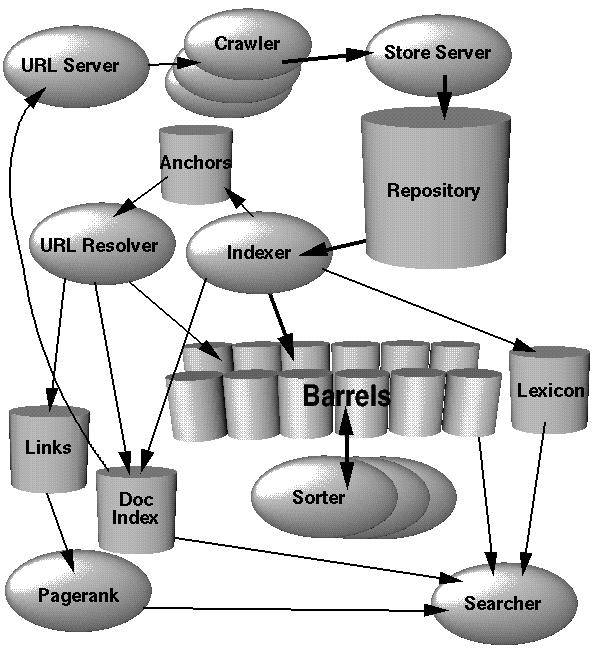

The architecture of the Google search engine

In the Google Search engine, web crawling is orchestrated by a network of distributed crawlers. A URL server coordinates this process by dispatching lists of URLs to be fetched to the crawlers. Once fetched, the web pages are transmitted to the storeserver, where they undergo compression and are stored in a repository. Each web page is assigned a unique identifier called a docID, generated whenever a new URL is extracted from a page.
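
To make the storage step concrete, here is a minimal sketch of a repository that assigns a docID the first time a URL is seen and keeps each page compressed. The `Repository` class, its method names, and the sequential docID scheme are illustrative assumptions, not Google's actual implementation. `zlib` is used only as a convenient standard-library compressor.

```python
import zlib


class Repository:
    """Toy stand-in for the storeserver plus repository (hypothetical names)."""

    def __init__(self):
        self.doc_ids = {}  # URL -> docID
        self.pages = {}    # docID -> compressed page bytes

    def doc_id_for(self, url):
        # Assumption: docIDs are handed out sequentially the first time
        # a URL is seen; a known URL keeps its existing docID.
        if url not in self.doc_ids:
            self.doc_ids[url] = len(self.doc_ids)
        return self.doc_ids[url]

    def store(self, url, html):
        # Compress the fetched page before storing it.
        doc_id = self.doc_id_for(url)
        self.pages[doc_id] = zlib.compress(html.encode("utf-8"))
        return doc_id

    def load(self, doc_id):
        # Decompress on the way out, as the indexer would.
        return zlib.decompress(self.pages[doc_id]).decode("utf-8")


repo = Repository()
first = repo.store("http://example.com/", "<html>hello</html>")
second = repo.store("http://example.com/about", "<html>about</html>")
```

Calling `repo.load(first)` returns the original HTML, which is the decompression step the indexer performs when it scans the repository.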

The indexing process is managed by the indexer and sorter components. The indexer scans the repository, decompresses the documents, and parses them. Each document is transformed into a series of word occurrences known as hits, which include the word, its position within the document, an estimate of font size, and capitalization. These hits are then distributed across a set of barrels, generating a partially sorted forward index. Additionally, the indexer extracts and stores information about all the links found in each web page in an anchors file, including the link’s origin, destination, and anchor text.
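
The following sketch shows one way a document could be turned into hits and spread across barrels to form a forward index. It is a simplification under stated assumptions: the `Hit` tuple, the `NUM_BARRELS` value, and the rule "barrel = wordID modulo number of barrels" are all made up for illustration, and font size is left out because plain text carries none.

```python
import re
from collections import defaultdict, namedtuple

# A simplified hit: word position plus a capitalization flag.
Hit = namedtuple("Hit", ["position", "capitalized"])

NUM_BARRELS = 4  # assumed value, chosen only for the example


def word_id(word, lexicon):
    # Assign the next free wordID the first time a word is seen.
    return lexicon.setdefault(word, len(lexicon))


def index_document(doc_id, text, lexicon, barrels):
    # Parse the text into words and record one hit per occurrence.
    for position, token in enumerate(re.findall(r"[A-Za-z0-9]+", text)):
        wid = word_id(token.lower(), lexicon)
        hit = Hit(position, token[0].isupper())
        # Forward index layout: barrel -> docID -> wordID -> list of hits.
        barrels[wid % NUM_BARRELS][doc_id][wid].append(hit)


lexicon = {}
barrels = [defaultdict(lambda: defaultdict(list)) for _ in range(NUM_BARRELS)]
index_document(0, "Google crawls the web", lexicon, barrels)
index_document(1, "the web is large", lexicon, barrels)
```

Because each barrel is keyed by docID first, looking up a document's words is cheap, but finding every document that contains a given word is not. That is the job of the inverted index built later by the sorter.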

The URLresolver reads the anchors file, converting relative URLs into absolute ones and then into corresponding docIDs. It incorporates the anchor text into the forward index, associating it with the relevant docID. Furthermore, the URLresolver generates a database of links, consisting of pairs of docIDs, which is utilized to compute PageRank for all documents.
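
As a rough illustration of the two steps described above, the sketch below resolves relative links into absolute URLs, maps them to docIDs, and then runs a basic PageRank iteration over the resulting docID pairs. The function names, the damping factor of 0.85, the iteration count, and the even spreading of rank from pages with no outgoing links are assumptions for this example. The PageRank shown is the common normalized textbook variant, not necessarily the exact formula Google uses.

```python
from urllib.parse import urljoin


def resolve_anchors(anchors, doc_ids):
    """anchors: list of (source_url, href, anchor_text) tuples."""
    links = []        # (from_docID, to_docID) pairs
    anchor_text = {}  # to_docID -> anchor texts pointing at that document
    for source_url, href, text in anchors:
        target_url = urljoin(source_url, href)  # relative -> absolute
        src = doc_ids.setdefault(source_url, len(doc_ids))
        dst = doc_ids.setdefault(target_url, len(doc_ids))
        links.append((src, dst))
        anchor_text.setdefault(dst, []).append(text)
    return links, anchor_text


def pagerank(links, num_docs, damping=0.85, iterations=50):
    out_links = {d: [] for d in range(num_docs)}
    for src, dst in links:
        out_links[src].append(dst)
    rank = [1.0 / num_docs] * num_docs
    for _ in range(iterations):
        new_rank = [(1.0 - damping) / num_docs] * num_docs
        for src, targets in out_links.items():
            if targets:
                share = damping * rank[src] / len(targets)
                for dst in targets:
                    new_rank[dst] += share
            else:
                # Assumption: a page without outgoing links spreads
                # its rank evenly over all documents.
                for dst in range(num_docs):
                    new_rank[dst] += damping * rank[src] / num_docs
        rank = new_rank
    return rank


anchors = [
    ("http://example.com/a/index.html", "../b.html", "page b"),
    ("http://example.com/b.html", "/a/index.html", "page a"),
    ("http://example.com/a/index.html", "c.html", "page c"),
]
doc_ids = {}
links, anchor_text = resolve_anchors(anchors, doc_ids)
ranks = pagerank(links, len(doc_ids))
```

Note that the anchor text is stored under the destination docID, not the source. This mirrors the idea in the text that the words of a link describe the page it points to.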

The sorter receives the partially sorted barrels, sorted by docID, and reorganizes them by wordID to construct the inverted index. This sorting process is performed in situ, minimizing the need for temporary storage. Additionally, the sorter produces a list of wordIDs and offsets within the inverted index. Subsequently, a utility program named Dump Lexicon combines this list with the lexicon generated by the indexer to produce an updated lexicon for use by the searcher.
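
The re-sorting step can be pictured with a small sketch. It assumes a barrel is simply a list of `(docID, wordID, hits)` entries, which is a simplification invented for this example. Python's `list.sort` reorders the list object itself, which is only a loose analogue of the in-situ sort described above.

```python
def invert(barrel):
    """Re-sort a forward barrel by wordID and return wordID -> offset."""
    # Reorder the same list by (wordID, docID) instead of docID.
    barrel.sort(key=lambda entry: (entry[1], entry[0]))
    offsets = {}
    for offset, (_doc_id, word_id, _hits) in enumerate(barrel):
        # Remember where the first entry for each wordID starts.
        offsets.setdefault(word_id, offset)
    return offsets


def postings(barrel, offsets, word_id):
    """Return the (docID, hits) entries for one word."""
    start = offsets.get(word_id)
    if start is None:
        return []
    result = []
    for doc_id, wid, hits in barrel[start:]:
        if wid != word_id:
            break  # reached the next word's entries
        result.append((doc_id, hits))
    return result


# Entries ordered by docID: (docID, wordID, hit positions).
barrel = [(0, 7, [1, 4]), (0, 3, [2]), (1, 3, [0]), (2, 7, [5])]
offsets = invert(barrel)
```

Here `offsets` plays the role of the list of wordIDs and offsets that the sorter produces: given a wordID, the searcher can jump straight to that word's documents.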

The searcher, operated by a web server, uses the lexicon generated by Dump Lexicon along with the inverted index and PageRank scores to respond to user queries. Together, these components cover the three stages a search engine needs: crawling, indexing, and retrieval.

How does Google crawl web content?

Managing a web crawler remains a formidable challenge due to its inherent complexity. Crawling involves traversing numerous web servers and interacting with various name servers, all of which are external to the system’s control.

To handle the scale of the web, Google employs a highly efficient distributed crawling system. A centralized URL server distributes lists of URLs to multiple crawlers. Each crawler maintains approximately 300 simultaneous connections, optimizing throughput. The crawling process operates on a sophisticated iterative algorithm tailored to accommodate the vast diversity of web content and server configurations. This dynamic approach ensures rapid retrieval of web pages, a critical requirement for an effective search engine. Consequently, the crawler is a pivotal yet intricate component of Google’s infrastructure.
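
The division of labour between the URL server and the crawlers can be sketched with `asyncio`. This is a simulation only: `fake_fetch` stands in for a real HTTP request, and the batch size, crawler count, and function names are assumptions for the example. The limit of 300 simultaneous connections per crawler is the figure quoted above.

```python
import asyncio

MAX_CONNECTIONS = 300  # per-crawler figure quoted in the text


async def fake_fetch(url):
    # Placeholder for a real HTTP request; no network access happens here.
    await asyncio.sleep(0)
    return f"<html>{url}</html>"


async def crawler(name, batches, results, max_connections=MAX_CONNECTIONS):
    # The semaphore caps how many fetches this crawler runs at once.
    limit = asyncio.Semaphore(max_connections)

    async def fetch_one(url):
        async with limit:
            results[url] = (name, await fake_fetch(url))

    while True:
        batch = await batches.get()
        if batch is None:  # sentinel: no more work for this crawler
            break
        await asyncio.gather(*(fetch_one(url) for url in batch))


async def url_server(urls, num_crawlers=2, batch_size=3):
    # The URL server hands out lists of URLs; the crawlers pull them.
    batches = asyncio.Queue()
    results = {}
    for start in range(0, len(urls), batch_size):
        batches.put_nowait(urls[start:start + batch_size])
    for _ in range(num_crawlers):
        batches.put_nowait(None)
    await asyncio.gather(
        *(crawler(f"crawler-{n}", batches, results) for n in range(num_crawlers))
    )
    return results


urls = [f"http://example.com/page{i}" for i in range(10)]
pages = asyncio.run(url_server(urls))
```

A real crawler would also need DNS caching, politeness delays, and error handling, which are left out to keep the coordination pattern visible.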

Running such a crawler means connecting to a very large number of servers and generating tens of millions of log entries. Because web pages and server setups vary so widely, it is practically impossible to test a crawler thoroughly without running it on a large part of the Internet.

According to computer scientist Richard Narby, in the Google Search engine, web crawling is done by several distributed crawlers. There is a URL server that sends lists of URLs to be fetched to the crawlers.

The Google Ranking System

Google maintains much more information about web documents than typical search engines. Every hit list includes position, font, and capitalization information. Combining all of this information into a rank is difficult.

First, consider the simplest case – a single-word query. In order to rank a document with a single-word query, Google looks at that document’s hit list for that word. Google counts the number of hits of each type on the hit list. Then it computes an IR score for the document. Finally, the IR score is combined with PageRank to give a final rank to the document.
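
The single-word case can be sketched as follows. The text does not give the actual weights or the formula that combines the IR score with PageRank, so everything numeric here is an assumption: the hit types and their weights, the cap after which extra hits stop helping, and the weighted sum used for the final rank.

```python
import math
from collections import Counter

# Assumed hit types and weights, chosen only for illustration.
TYPE_WEIGHTS = {"title": 5.0, "anchor": 4.0, "url": 3.0, "plain": 1.0}
MAX_USEFUL_COUNT = 8  # assumed cap: further hits add nothing


def count_weight(count):
    # Grows with the count at first, then stops growing at the cap.
    return math.log1p(min(count, MAX_USEFUL_COUNT))


def ir_score(hit_types):
    # Count the hits of each type, then weight each count by its type.
    counts = Counter(hit_types)
    return sum(TYPE_WEIGHTS[t] * count_weight(c) for t, c in counts.items())


def final_rank(hit_types, pagerank, pagerank_weight=0.5):
    # Assumption: a simple weighted sum combines the two signals.
    return (1 - pagerank_weight) * ir_score(hit_types) + pagerank_weight * pagerank


doc_a = final_rank(["title", "plain", "plain"], pagerank=0.2)
doc_b = final_rank(["plain", "plain", "plain"], pagerank=0.2)
```

With equal PageRank, `doc_a` scores higher than `doc_b` because one of its hits is in the title. Capping the count keeps a page from ranking well simply by repeating a word many times.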

For a multi-word search, the situation is more complicated. Multiple hit lists must now be scanned at once so that hits occurring close together in a document are weighted higher than hits occurring far apart. For every matched set of hits, a proximity is computed. Counts are then kept not just for every type of hit but for every combination of type and proximity, and these counts are converted into an IR score, as in the single-word case. (Refer fig.)
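
A two-word version of this idea is sketched below. It assumes a hit is a `(position, type)` pair, treats every pairing of a hit for the first word with a hit for the second as a matched set, and sorts distances into a handful of made-up proximity bins. The bin bounds and the `1 / (bin + 1)` weighting are illustrative assumptions, not Google's values.

```python
from collections import Counter
from itertools import product

# Assumed upper bounds (in words) for each proximity bin.
PROXIMITY_BOUNDS = [1, 2, 4, 8, 16]


def proximity_bin(distance):
    # Smaller bin number means the two hits are closer together.
    for bin_index, bound in enumerate(PROXIMITY_BOUNDS):
        if distance <= bound:
            return bin_index
    return len(PROXIMITY_BOUNDS)  # everything farther apart


def type_proximity_counts(hits_a, hits_b):
    # Count matched pairs for every (type, type, proximity) combination.
    counts = Counter()
    for (pos_a, type_a), (pos_b, type_b) in product(hits_a, hits_b):
        key = (type_a, type_b, proximity_bin(abs(pos_a - pos_b)))
        counts[key] += 1
    return counts


def proximity_score(hits_a, hits_b):
    # Assumption: a pair in bin b contributes 1 / (b + 1).
    counts = type_proximity_counts(hits_a, hits_b)
    return sum(c / (b + 1) for (_ta, _tb, b), c in counts.items())


hits_a = [(3, "plain"), (40, "plain")]
hits_b = [(4, "plain"), (100, "title")]
counts = type_proximity_counts(hits_a, hits_b)
close = proximity_score([(3, "plain")], [(4, "plain")])
far = proximity_score([(3, "plain")], [(90, "plain")])
```

Here `close` is larger than `far`, which is the behaviour the text describes: words that appear next to each other count for more than words that merely occur in the same document.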
