Speech recognition means talking to a computer and having it recognize what we are saying. Fundamentally, the process is a pipeline that converts PCM (Pulse Code Modulation) digital audio from a sound card into recognized speech. Speech recognition technology has evolved for more than 40 years, spurred on by advances in signal processing, algorithms, architectures, and hardware. During that time it has gone from a laboratory curiosity to an art, and eventually to a full-fledged technology that is practiced and understood by a wide range of engineers, scientists, linguists, psychologists, and systems designers. Over those four decades, a steady stream of increasingly difficult tasks has been tackled and solved.
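
As a minimal sketch of the first stage of that pipeline, the code below cuts raw PCM samples into short overlapping frames and reduces each frame to one number. It assumes 16-bit little-endian mono PCM and illustrative framing values (25 ms frames every 10 ms at 16 kHz); none of these parameters come from the article. Log energy is only the simplest per-frame feature. A real front end would add spectral features such as filter-bank or cepstral coefficients.

```python
import math
import struct

def pcm16_to_samples(pcm: bytes) -> list:
    """Decode 16-bit little-endian mono PCM bytes into integer samples (assumed format)."""
    count = len(pcm) // 2
    return list(struct.unpack(f"<{count}h", pcm[: count * 2]))

def frame_log_energies(samples: list, frame_len: int = 400, hop: int = 160) -> list:
    """Split samples into overlapping frames and return the log energy of each frame.

    frame_len=400 and hop=160 correspond to 25 ms frames every 10 ms at a
    16 kHz sampling rate (illustrative values assumed for this sketch).
    """
    energies = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start : start + frame_len]
        energy = sum(s * s for s in frame)
        energies.append(math.log(energy + 1e-10))  # tiny floor avoids log(0) on silence
    return energies

# Usage: 0.1 s of a 440 Hz tone at 16 kHz, packed as PCM bytes and then analyzed.
rate = 16000
tone = [int(8000 * math.sin(2 * math.pi * 440 * n / rate)) for n in range(rate // 10)]
pcm = struct.pack(f"<{len(tone)}h", *tone)
energies = frame_log_energies(pcm16_to_samples(pcm))
print(len(energies), "frames")
```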

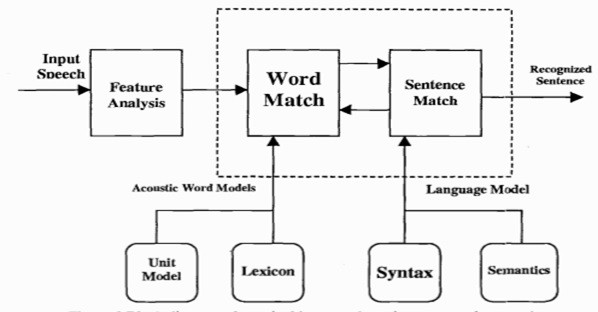

Generic Speech Recognition System:

The figure shows a block diagram of a typical integrated continuous speech recognition system. Interestingly enough, this generic block diagram can be made to work on virtually any speech recognition task devised in the past 40 years, e.g., isolated word recognition, connected word recognition, and continuous speech recognition. The feature analysis module provides the acoustic feature vectors used to characterize the spectral properties of the time-varying speech signal. The word-level acoustic match module evaluates the similarity between the input feature vector sequence (corresponding to a portion of the input speech) and a set of acoustic word models for all words in the recognition task vocabulary, to determine which words were most likely spoken.
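
The word-level match can be illustrated with dynamic time warping (DTW), the classic template-matching technique for isolated word recognition. This is a swapped-in illustration and not necessarily the method the figure's system uses (statistical models such as HMMs are the more common choice). The two-word vocabulary and 2-D feature values are hypothetical. DTW aligns sequences of different lengths, so a slowly spoken word still matches its template.

```python
import math

def euclidean(a, b):
    """Distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def dtw_distance(seq_a, seq_b):
    """Minimum cumulative frame distance over all monotonic alignments."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = best cumulative cost of aligning seq_a[:i] with seq_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = euclidean(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # seq_b frame reused (stretch seq_b)
                                 cost[i][j - 1],      # seq_a frame reused (stretch seq_a)
                                 cost[i - 1][j - 1])  # advance both sequences
    return cost[n][m]

def recognize_word(features, templates):
    """Return the vocabulary word whose template is closest under DTW."""
    return min(templates, key=lambda word: dtw_distance(features, templates[word]))

# Hypothetical feature templates for a two-word vocabulary.
templates = {
    "yes": [(1.0, 0.0), (2.0, 0.5), (3.0, 1.0)],
    "no": [(0.0, 3.0), (0.5, 2.0), (1.0, 1.0)],
}
# A slower, slightly noisy "yes": the extra frames are absorbed by the warping.
utterance = [(1.1, 0.0), (1.0, 0.1), (2.1, 0.4), (2.9, 1.1), (3.0, 1.0)]
print(recognize_word(utterance, templates))
```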

The sentence-level match module uses a language model (i.e., a model of syntax and semantics) to determine the most likely sequence of words. Syntactic and semantic rules can be specified either manually, based on task constraints, or with statistical models such as word and class N-gram probabilities. Search and recognition decisions are made by considering all likely word sequences and choosing the one with the best matching score as the recognized sentence.
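
To make the sentence-level decision concrete, here is a minimal sketch that combines an acoustic log score with a bigram (N = 2) language-model log probability and keeps the candidate word sequence with the best total. The bigram table, the acoustic scores, and the `lm_weight` parameter are all hypothetical. A real decoder searches the space of word sequences (for example with Viterbi or beam search) rather than enumerating a short candidate list.

```python
import math

# Hypothetical bigram probabilities P(word | previous word); "<s>" marks sentence start.
BIGRAMS = {
    ("<s>", "call"): 0.6, ("<s>", "tall"): 0.1,
    ("call", "home"): 0.7, ("tall", "home"): 0.05,
    ("call", "comb"): 0.01, ("tall", "comb"): 0.01,
}
UNSEEN = 1e-6  # floor for missing bigrams, a crude stand-in for proper smoothing

def lm_log_prob(words):
    """Log probability of a word sequence under the bigram table."""
    score, prev = 0.0, "<s>"
    for w in words:
        score += math.log(BIGRAMS.get((prev, w), UNSEEN))
        prev = w
    return score

def best_sentence(candidates, lm_weight=1.0):
    """candidates: list of (words, acoustic_log_score); returns the best word list."""
    return max(candidates, key=lambda c: c[1] + lm_weight * lm_log_prob(c[0]))[0]

candidates = [
    (["tall", "comb"], -10.0),  # acoustically best, but an unlikely word sequence
    (["call", "home"], -11.0),  # slightly worse acoustically, far more likely as language
]
print(best_sentence(candidates))  # ['call', 'home']
```

With `lm_weight=0.0` the acoustically best candidate wins, which shows how much the language model contributes to the final choice.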

Almost every aspect of the continuous speech recognizer of Figure 1 has been studied and optimized over the years. As a result, we have obtained a great deal of knowledge about how to design the feature analysis module, how to choose appropriate recognition units, how to populate the word lexicon, how to build acoustic word models, how to model language syntax and semantics, how to decode word matches against word models, how to efficiently determine a sentence match, and, finally, how to choose the best recognized sentence.

Building Good Speech-Based Applications: In addition to good speech recognition technology, effective speech-based applications depend heavily on several factors, including:

- Good user interfaces, which make the application easy to use and robust to the kinds of confusion that arise in human-machine communication by voice.

- Good models of dialogue that keep the conversation moving forward, even in periods of great uncertainty on the part of either the user or the machine.

- Matching the task to the technology.

We now expand somewhat on each of these factors:

User Interface Design: To make a speech interface as simple and as effective as a graphical user interface (GUI), three key design principles should be followed as closely as possible, namely:

- Provide a continuous representation of the objects and actions of interest.

- Provide a mechanism for rapid, incremental, and reversible operations whose impact on the object of interest is immediately visible.

- Use physical actions or labeled button presses instead of text commands.

Dialogue Design Principles: For many interactions between a person and a machine, a dialogue is needed to complete the interaction. The ‘ideal’ dialogue allows either the user or the machine to initiate queries, or to choose to respond to queries initiated by the other side. (Such systems are called ‘mixed initiative’ systems.) A complete set of design principles for dialogue systems has not yet evolved (it is still far too early). However, just as we have learned good speech interface design principles, many of the same or similar principles are evolving for dialogue management. The key principles that have evolved so far are the following:

- Summarize actions to be taken, whenever possible.

- Provide real-time, low-delay responses from the machine, and allow the user to barge in at any time.

- Orient users to their ‘location’ in task space as often as possible.

- Use flexible grammars to provide incrementality of the dialogue.

- Whenever possible, customize and personalize the dialogue (novice/expert).

Match Task to the Technology: It is essential that any application of speech recognition be realistic about the capabilities of the technology and build in failure-correction modes. For example, building a credit card recognition application before digit error rates fell below 0.5% per digit is a formula for failure: for a 16-digit credit card number, the string error rate will be at the 10% level or higher, frustrating customers who speak clearly and distinctly, and making the system totally unusable for customers who slur their speech or otherwise make their spoken inputs difficult to understand. Following this principle, the following successful applications have been built:

- Telecommunications: Command-and-Control, agents, call center automation, customer care, voice calling.

- Office/desktop: voice navigation of desktop, voice browser for the Internet, voice dialer, dictation.

- Manufacturing business: package sorting, data entry, form filling.

- Medical/legal: the creation of stylized reports.

- Games/aids to the handicapped: voice control of selected features of a game, a wheelchair, or the environment (e.g., climate control).
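
The credit card arithmetic in the Match Task to the Technology paragraph can be checked directly. Assume digit errors are independent (a simplifying assumption). Then a string of n digits is correct only if every digit is correct, so the string error rate is 1 - (1 - p)^n. At exactly p = 0.5% this gives about 7.7% for 16 digits, and the rate passes 10% once p exceeds roughly 0.66%.

```python
def string_error_rate(digit_error_rate: float, num_digits: int) -> float:
    """Probability that at least one digit in the string is misrecognized,
    assuming digit errors are independent (a simplifying assumption)."""
    return 1.0 - (1.0 - digit_error_rate) ** num_digits

# Per-digit error rates around the 0.5% threshold discussed in the text.
for p in (0.005, 0.01, 0.02):
    print(f"digit error {p:.1%} -> 16-digit string error {string_error_rate(p, 16):.1%}")
```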

The Telecommunications Need for Speech Recognition

The telecommunications network is evolving as the traditional POTS (Plain Old Telephone Service) network comes together with the dynamically evolving packet network, in a structure that we believe will look something like the one shown in the figure below.

Intelligence in this evolving network is distributed at the desktop (the local intelligence), at the terminal device (the telephone, screen phone, PC, etc.), and in the network. To provide universal services, there need to be interfaces that operate effectively for all terminal devices. Since the most ubiquitous terminal device is still the ordinary telephone handset, the evolving network must rely on the availability of speech interfaces to all services. This explains the growing need for speech recognition for Command-and-Control applications, and for natural language understanding to maintain a dialogue with the machine.

Telecommunication Applications of Speech Recognition

Speech recognition was introduced into the telecommunications network in the early 1990s for two reasons: to reduce costs via automation of attendant functions, and to provide new revenue-generating services that were previously impractical because of the cost of using attendants.

Examples of telecommunications services which were created to achieve cost reduction include the following:

Automation of Operator Services: Systems like the Voice Recognition Call Processing (VRCP) system introduced by AT&T or the Automated Alternate Billing System (AABS) introduced by Nortel enabled operator functions to be handled by speech recognition systems. The VRCP system handled so-called ‘operator assisted’ calls such as Collect, Third Party Billing, Person-to-Person, Operator Assisted Calling, and Calling Card calls. The AABS system automated the acceptance (or rejection) of billing charges for reverse-charge calls by recognizing simple variants of the two-word vocabulary Yes and No.

Automation of Directory Assistance: Systems were created to assist operators with the task of determining telephone numbers in response to customer queries by voice. Both NYNEX and Nortel introduced systems that performed front-end city name recognition so as to reduce the operator search space for the desired listing, and several experimental systems were created to complete the directory assistance task by attempting to recognize individual names in a directory of as many as 1 million names. Such systems are not yet practical (because of the confusability among names), but for small directories such systems have been widely used (e.g., in corporate environments).

Voice Dialing: Systems have been created for voice dialing by name (so-called alias dialing, such as Call Home or Call Office) by AT&T, NYNEX, and Bell Atlantic, and for dialing by number (AT&T SDN/NRA), enabling customers to complete calls without having to push the buttons associated with the telephone number being called.

Replacing complicated and often frustrating ‘push button’ IVR:

When poorly implemented and managed, IVR (interactive voice response) and automated call-handling systems can be unpopular with, and frustrating for, customers. However, there is a way to improve this situation. Termed ‘intelligent call steering’ (ICS), it does not involve any ‘button pushing’. The system simply asks customers what they want (in their words, not yours) and then transfers them to the most suitable resource to handle their call. Callers dial one number and are greeted by the message “Welcome to XYZ Company, how can I help you?” The caller is routed to the right agent within 20 to 30 seconds of the call being answered, with misdirected calls reduced to as low as 3 to 5 percent.

By introducing Natural Language Speech Recognition (NLSR), general insurance company Suncorp replaced its original push-button IVR, enabling customers to simply say what they want. Using a financial-services statistical language model of over 100,000 phrases, the system can more accurately assess the nature of the call and transfer it on the first attempt to the appropriate department or advisor. The company reduced its call waiting times to around 30 seconds and misdirected calls to virtually nil.
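
The routing step in call steering can be sketched as text classification on the recognized utterance. The sketch below uses a naive Bayes word-count classifier with add-one smoothing as a swapped-in stand-in. It is not the statistical language model used in the Suncorp deployment, and the destinations and training phrases are hypothetical. It also assumes the caller's speech has already been transcribed to text.

```python
import math
from collections import Counter

# Hypothetical training phrases per destination; a production system would use
# a far larger corpus (the Suncorp example cites over 100,000 phrases).
TRAINING = {
    "claims": ["i want to make a claim", "my car was damaged", "lodge a claim"],
    "billing": ["i want to pay my bill", "question about my premium", "update payment details"],
    "new_policy": ["i need a quote", "buy home insurance", "get a new policy"],
}

def train(training):
    """Count words per destination and estimate destination priors."""
    word_counts = {dest: Counter(w for phrase in phrases for w in phrase.split())
                   for dest, phrases in training.items()}
    vocab = {w for counts in word_counts.values() for w in counts}
    total_phrases = sum(len(phrases) for phrases in training.values())
    priors = {dest: len(phrases) / total_phrases for dest, phrases in training.items()}
    return word_counts, vocab, priors

def route(utterance, model):
    """Pick the destination with the highest naive Bayes log score."""
    word_counts, vocab, priors = model

    def score(dest):
        counts = word_counts[dest]
        denom = sum(counts.values()) + len(vocab)  # add-one (Laplace) smoothing
        return math.log(priors[dest]) + sum(
            math.log((counts[w] + 1) / denom) for w in utterance.lower().split())

    return max(priors, key=score)

model = train(TRAINING)
print(route("I would like to make a claim for my car", model))
```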

In-car systems

Typically, a manual control input, for example a finger control on the steering wheel, enables the speech recognition system, and this is signaled to the driver by an audio prompt. Following the audio prompt, the system has a “listening window” during which it may accept a speech input for recognition.
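
The press-prompt-listen sequence above can be sketched as a small state machine. The five-second window length, the class and method names, and the printed prompt are hypothetical. Actual window lengths and prompt behavior vary by manufacturer. The clock is injectable so the timing can be tested deterministically.

```python
import time

class ListeningWindow:
    """Push-to-talk gating: speech input is accepted only during a fixed
    window that opens when the driver presses the control (hypothetical sketch)."""

    def __init__(self, window_seconds=5.0, clock=time.monotonic):
        self.window_seconds = window_seconds  # hypothetical window length
        self.clock = clock                    # injectable clock for testing
        self.opened_at = None                 # None means the recognizer is disabled

    def press_talk_button(self):
        """Manual control input: play the prompt and open the window."""
        print("<audio prompt>")  # stand-in for the real audio prompt
        self.opened_at = self.clock()

    def accepts_speech(self):
        """True while the listening window is open; closes it once expired."""
        if self.opened_at is None:
            return False
        if self.clock() - self.opened_at > self.window_seconds:
            self.opened_at = None  # window expired; the driver must press again
            return False
        return True

# Usage with a fake clock so the timing is deterministic.
now = [0.0]
window = ListeningWindow(window_seconds=5.0, clock=lambda: now[0])
print(window.accepts_speech())  # False: control not pressed yet
window.press_talk_button()
now[0] = 3.0
print(window.accepts_speech())  # True: inside the listening window
now[0] = 6.0
print(window.accepts_speech())  # False: window has expired
```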

Simple voice commands may be used to initiate phone calls, select radio stations, or play music from a compatible smartphone, MP3 player, or music-loaded flash drive. Voice recognition capabilities vary by car make and model. Some of the most recent car models offer natural-language speech recognition in place of a fixed set of commands, allowing the driver to use full sentences and common phrases. With such systems, there is no need for the user to memorize a set of fixed command words.

Medical documentation

In the health care sector, speech recognition can be implemented in the front end or back end of the medical documentation process. In front-end speech recognition, the provider dictates into a speech-recognition engine, the recognized words are displayed as they are spoken, and the provider is responsible for editing and signing off on the document. In back-end (deferred) speech recognition, the provider dictates into a digital dictation system, the voice is routed through a speech-recognition engine, and the recognized draft document is routed along with the original voice file to an editor, who edits the draft and finalizes the report. Deferred speech recognition is currently widely used in the industry.

High-performance fighter aircraft

Substantial efforts have been devoted in the last decade to the test and evaluation of speech recognition in fighter aircraft. Of particular note have been the US program in speech recognition for the Advanced Fighter Technology Integration (AFTI)/F-16 aircraft (F-16 VISTA), the program in France for Mirage aircraft, and other programs in the UK dealing with a variety of aircraft platforms. In these programs, speech recognizers have been operated successfully in fighter aircraft, with applications including setting radio frequencies, commanding an autopilot system, setting steer-point coordinates and weapons release parameters, and controlling flight displays.

Usage in education and daily life

Speech recognition can be useful for learning a second language. It can teach proper pronunciation, in addition to helping a person develop fluency in speaking.

Students who are blind or have very low vision can benefit from using the technology to convey words and then hear the computer recite them, as well as to operate a computer by voice command instead of having to look at the screen and keyboard.

Students who are physically disabled or who suffer from repetitive strain injury or other injuries to the upper extremities can be relieved of having to worry about handwriting, typing, or working with a scribe on school assignments by using speech-to-text programs. They can also use speech recognition technology to search the Internet or use a computer at home without having to physically operate a mouse and keyboard.

Speech recognition can allow students with learning disabilities to become better writers. By saying the words aloud, they can increase the fluidity of their writing, and be alleviated of concerns regarding spelling, punctuation, and other mechanics of writing.

The worlds of telecommunications and speech recognition are both changing and evolving rapidly. Early applications of the technology have achieved varying degrees of success. The promise for the future is significantly higher performance in almost every area of speech recognition technology, with more robustness to different speakers, background noise, and other sources of variability. This will ultimately lead to reliable, robust voice interfaces to every telecommunications service offered, thereby making those services universally available.
